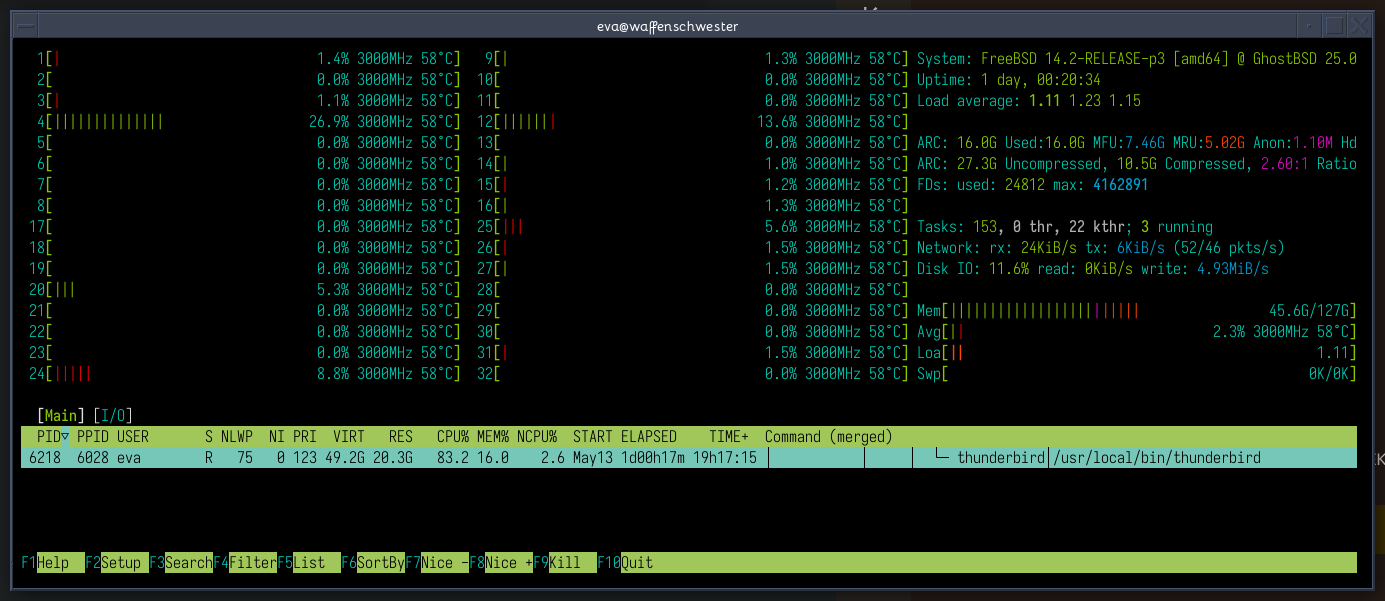

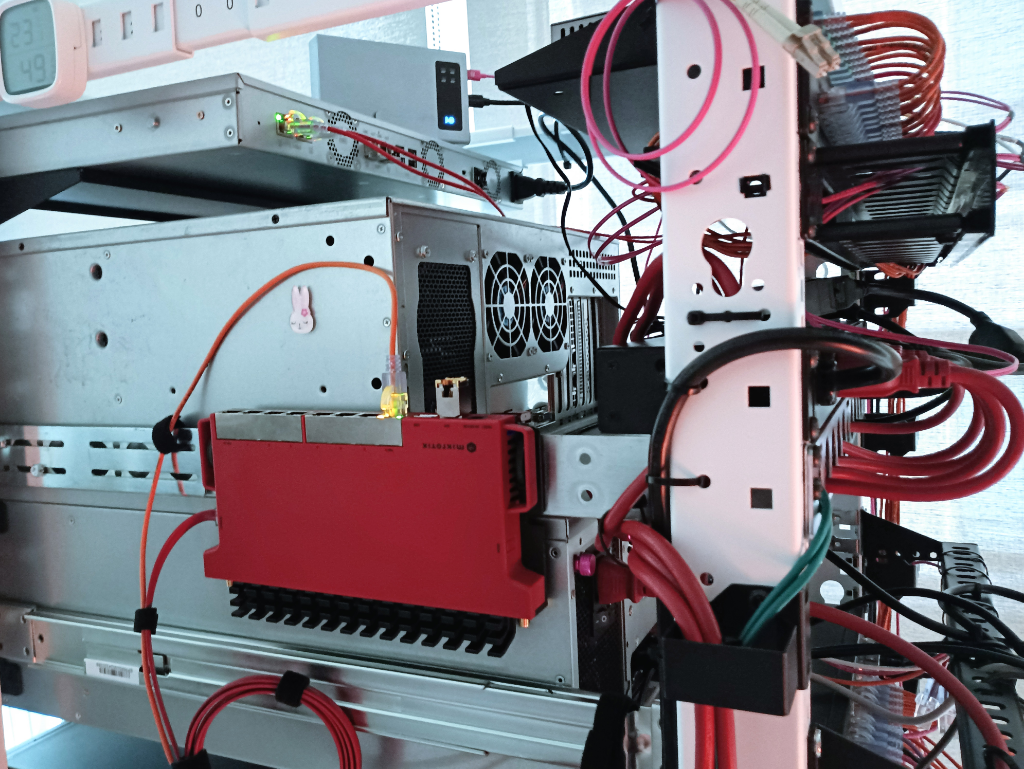

Sunday offers some EOD and EOW wrap-up thoughts, with some meandering grammatical expressionism. The present state of this week’s hardware resource aggregation into one of the LLM research boxes is… 98%. The data migration from earlier today is complete, with ZFS offering some enjoyable numbers.

zpool scrub is cranking out 19.6G/s, with 2.74T scanned at approx 2min, and the pool predicting a total of 38min to complete.
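For the curious, a quick back-of-envelope check of those scrub figures. This is only a sketch: it assumes zpool's `T` and `G` are binary units (1T = 1024G) and that the scan rate was roughly constant, and the function name is made up for illustration.

```python
# Sanity check: how long should it take to scan a given amount of data
# at a given scan rate? Assumes binary units (1T = 1024G), which is an
# assumption about how the numbers above were reported.

def seconds_to_scan(scanned_t: float, rate_g_per_s: float) -> float:
    """Seconds needed to scan `scanned_t` (in T) at `rate_g_per_s` (in G/s)."""
    return scanned_t * 1024 / rate_g_per_s


elapsed = seconds_to_scan(2.74, 19.6)
print(f"{elapsed:.0f} s (~{elapsed / 60:.1f} min)")  # prints "143 s (~2.4 min)"
```

So "approx 2min" lines up with the reported rate, give or take rounding.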

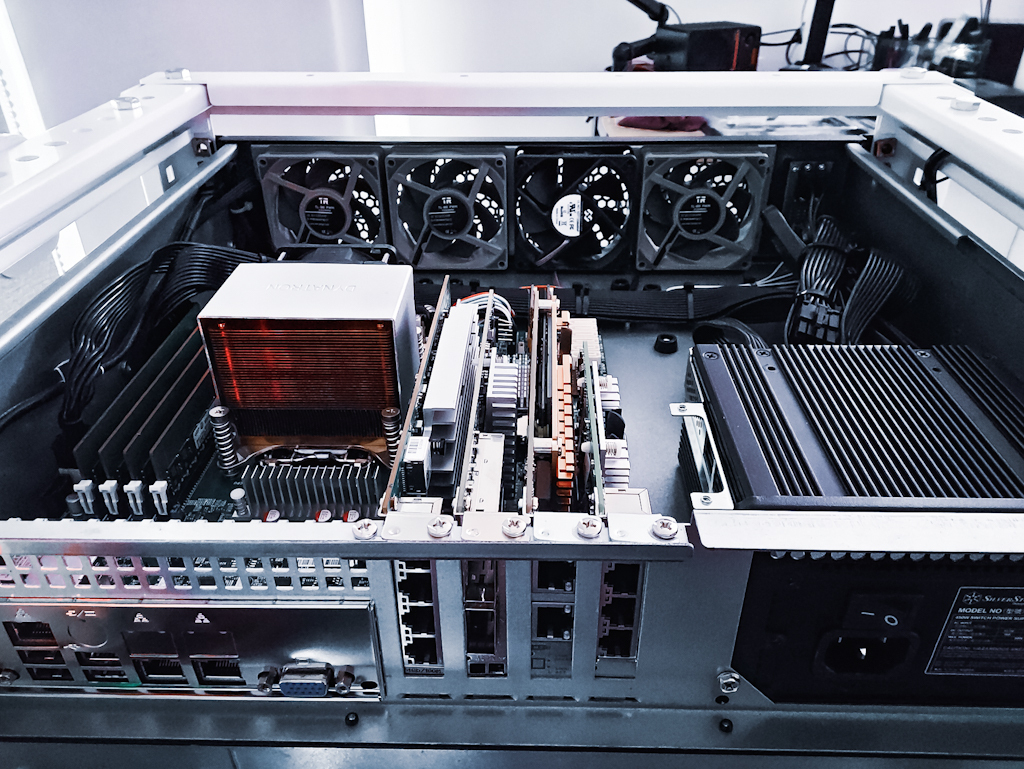

While building the spec and futzing with the BOM, one of my priorities was to ensure zero PCIe lane contention, with I/O going direct to 64 cores at 3.5GHz peaks, and eight memory channels in use (4x ECC-REG + 4x NVDIMM). All of those conditions have been benchmarking well so far; yay for meeting personally set expectations. 🤗

I’d say this is pretty decent for a draid1 array with NVMe on a single-socket Xeon ICX box, and so far much better than the dual-socket Ice Lake boxes that I was benching at ${OldOrg}. The recurring two-socket issue on this Xeon generation, and on its successor Sapphire Rapids, is that there’s too much cross-socket comms unless specific choices are made with PCIe peripherals, BIOS tuning, kernel settings, and core-pinning. All of that can be time consuming at a minimum, and head scratching with “Just call Premier Support!” at the far end, unless you know better.

This should not come as a surprise to anyone who has been benchmarking multi-socket Xeons ever since Xeons were brought into existence. Having had that experience, and because “data + facts + personal experience == real answers”, it was simple to predict performance concerns related to cross-socket latency. Don’t forget PCIe slot-to-socket sequencing and NUMA domain crossing, especially when optimizing for high-throughput network-based storage transfers. If you scaled from 1 socket to 2 to 4 on the old Nehalem-EX based Xeons, then you know what I mean - they could never match the classic Big Iron vendors' quad-socket designs like Sun’s SPARC T3 and M3 series, and were certainly not competitive with IBM’s POWER7 options.
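If you want to see the slot-to-socket situation on your own Linux box, here is a minimal sketch that reads the NUMA node the kernel reports for each NVMe controller via sysfs (`/sys/class/nvme/<ctrl>/device/numa_node`). The function names and the `sys_root` parameter are mine, purely for illustration; a value of -1 means the kernel has no node info for that device, and the sketch treats unreadable entries the same way.

```python
from pathlib import Path


def nvme_numa_nodes(sys_root: str = "/sys") -> dict[str, int]:
    """Map each NVMe controller name to the NUMA node reported in sysfs.

    Controllers whose numa_node file is missing or unreadable are
    recorded as -1 (same value the kernel uses for "unknown").
    """
    nodes: dict[str, int] = {}
    for ctrl in sorted(Path(sys_root, "class", "nvme").glob("nvme*")):
        numa_file = ctrl / "device" / "numa_node"
        try:
            nodes[ctrl.name] = int(numa_file.read_text().strip())
        except (OSError, ValueError):
            nodes[ctrl.name] = -1
    return nodes


def spans_multiple_nodes(nodes: dict[str, int]) -> bool:
    """True if the controllers sit behind more than one known NUMA node."""
    known = {n for n in nodes.values() if n >= 0}
    return len(known) > 1


if __name__ == "__main__":
    found = nvme_numa_nodes()
    for name, node in found.items():
        print(f"{name}: NUMA node {node}")
    if spans_multiple_nodes(found):
        print("NVMe devices span multiple NUMA nodes; expect cross-socket traffic")
```

On a single-socket box without sub-NUMA clustering, everything should land on one node, which is rather the point.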

Anyway, there’s a little game to play in storage server land called, “high density rack-scale storage is more efficient with single-socket boards, using high-lane-count, high-clock, big-cache SKUs”. This isn’t the time for a lesson in the predictive algorithms necessary for resource optimization, the ones which should be used for cost/benefit analysis of horizontal vs vertical (or combined) storage system scaling at the rack level; but it is time for me to get off of the computer and eat some cherries and watch one of the alternate endings for Cyberpunk 2077 Phantom Liberty!

And really, for a Sunday evening, remaining at this standing desk in the office-lab is not appealing, particularly due to the “it’s just too damn hot for pants!” environment, even with the A/C on full blast (common metric for this lab, though it’s fine weather for a skirt).

PS, the pool scrub finished with zero errors. I am pleased. Migration complete.

#linux #zfs #xeon #intel #ibm #sunmicrosystems #storage #hardware #engineering #memories