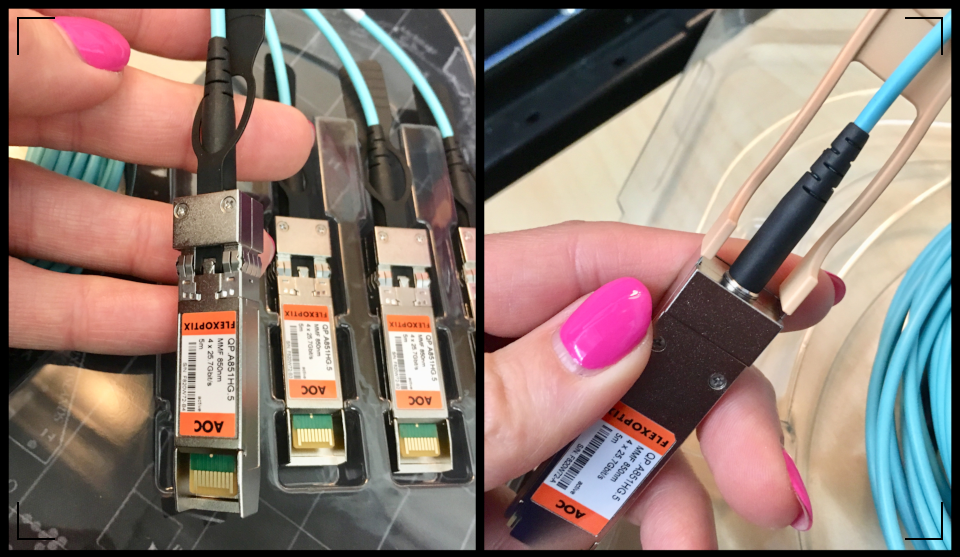

Generally, when deploying DAC for intra-rack network connections, particularly the heavy-gauge Mellanox quad-breakout type, I go with the RU waterfall–fanout method. If I'm using regular fiber optics (MMF/SMF) instead, the calculations sometimes change, depending on whether the runs are multi-lane MPO-to-single-port fanouts or port-to-port direct connections. Anyway, acronyms aside, let's move on.

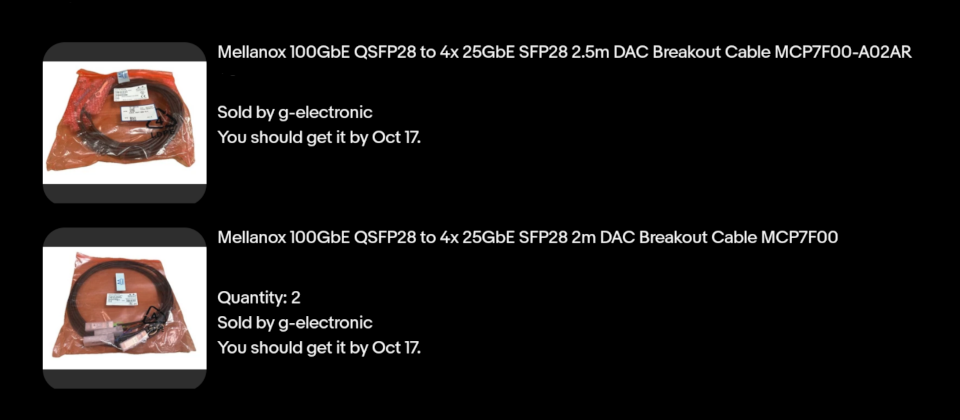

In preparation for a hardware refresh and a much-needed colocation visit, I've ordered additional Mellanox DPUs, which require a few more cables to augment the existing hardware stockpile. I already have some 100G to 4x 25G breakouts, but not enough of them, and not in the correct lengths. Yay for eBay: all acquired for under $100 as "new old stock".

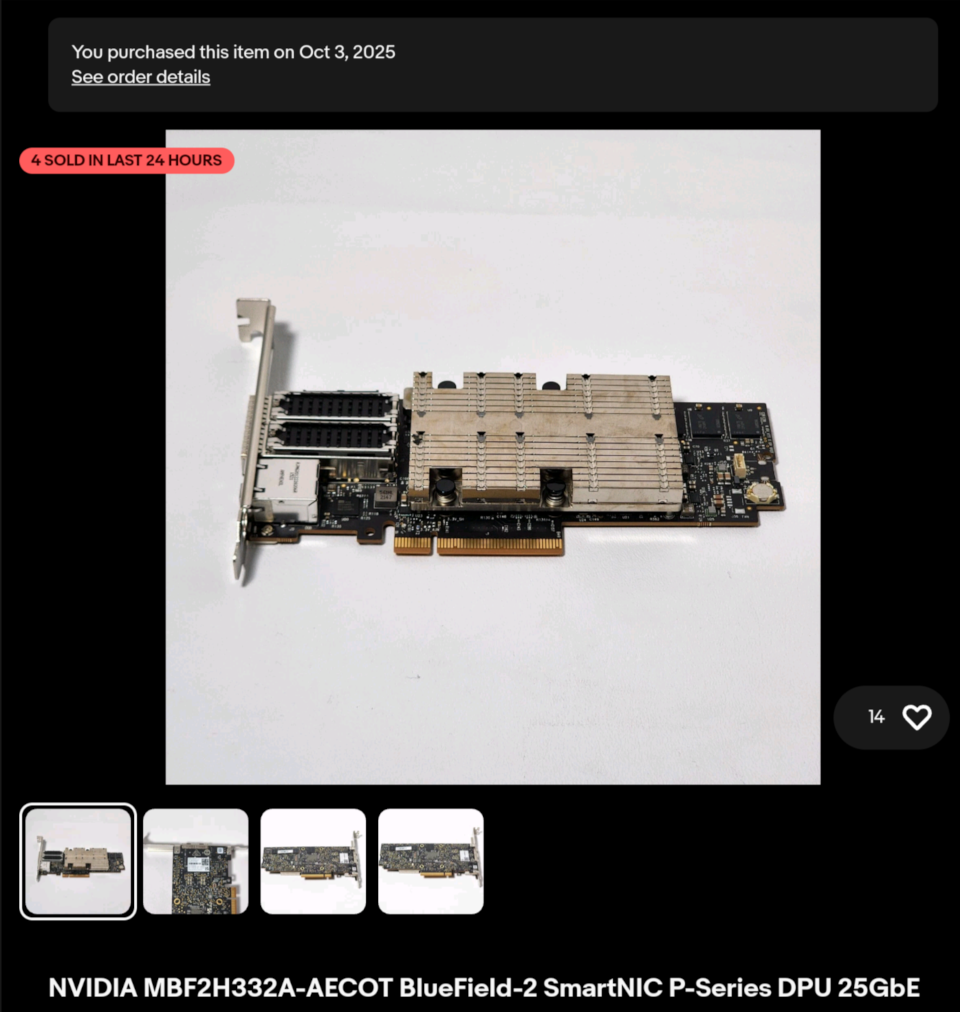

What do those DPUs look like?

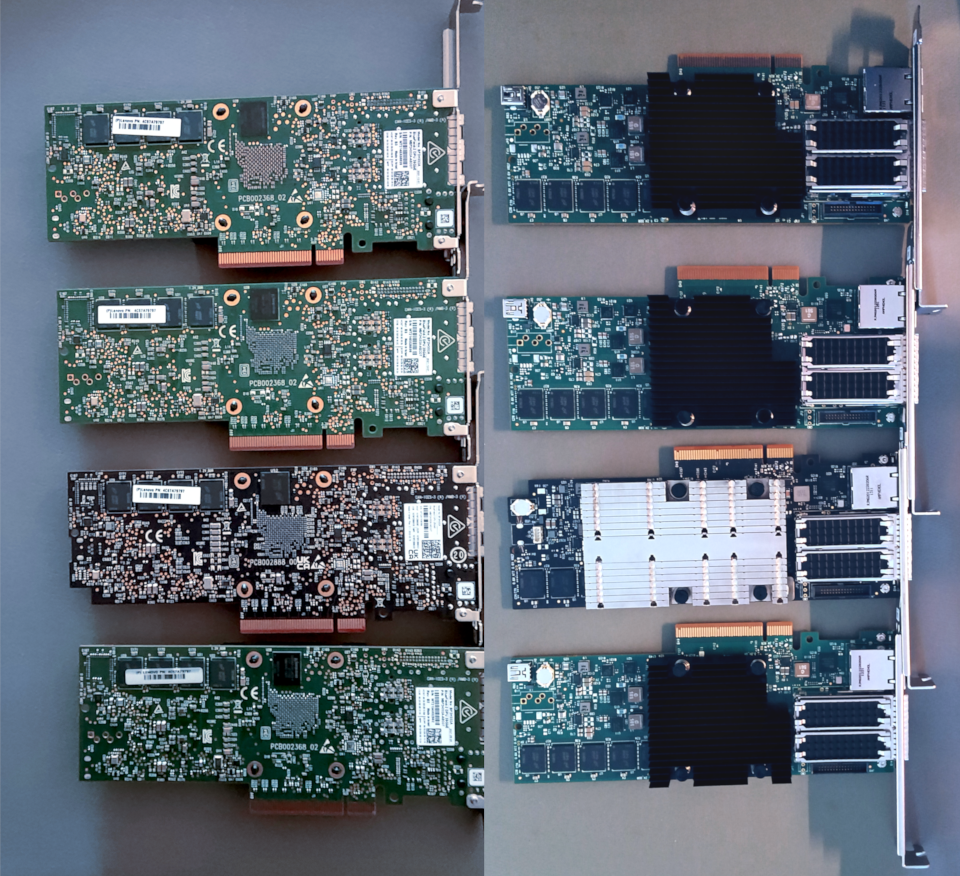

Well, for starters, they don't look exactly like these old ConnectX-3 QDR InfiniBand NICs, but the DAC are similar. Mellanox changed its heatsink aesthetic along the way, and the heatsinks on the newer chipsets are far larger, with no less cooling required (~200+ LFM of airflow).

Once the DPUs arrive (supposedly today), I'll take some photos and clean all of the PCBs, traces, and slot connectors. In the interim, here's the eBay photo for reference.

The big question: do I spend precious time on the R630 cluster in cab68, pulling their ConnectX-4 dual-port 100G NICs, or install the BF2 DPUs in the cluster of IBM LC922 POWER9 systems in cab70? Hmmmm... 🤔 That decision won't be made today, so let's think about cable maps and rack units instead.

Here's a lazy ASCII diagram showing three lengths of DAC used for rack-height-specific connections, with each cable SKU 0.5 m longer or shorter than the next to match the destination system's position in the rack. DAC is heavy, so any time we can shorten the cable runs we (perhaps marginally) reduce latency, take load off the rack cable organizers, and generally end up with a cleaner layout. The diagram is not to scale for either cab68 or cab70; it simply shows how the waterfall method uses length-based drops.

```
+----------------------------------------------------------+
| RU42 | [Leaf Switch, QSFP28/56]                           |
|      | [P01..P16]                                         |
|      |   |                                                |
|      |   +-DAC-1 1.5m ----------> [RU38] Server-A        |
|      |   +-DAC-2 2.0m ------------> [RU32] Server-B      |
|      |   +-DAC-3 2.0m ------------> [RU26] Server-C      |
|      |   +-DAC-4 2.5m ---------------> [RU20] Server-D   |
+----------------------------------------------------------+
```
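To make the waterfall idea concrete, here is a minimal Python sketch that picks the shortest DAC length covering each drop from the leaf at RU42. It assumes a standard 44.45 mm (1.75 in) rack unit, a hypothetical 1.2 m allowance for horizontal routing, bend radius and service loops (picked so the output matches the diagram, not measured), and a hypothetical twinax velocity factor of 0.7 for the latency estimate; check the actual cable datasheet before trusting either number.

```python
# Sketch: pick the shortest DAC SKU that covers each waterfall drop.
# ASSUMPTIONS (hypothetical, not measured): 1.2 m routing allowance for
# horizontal runs, service loops and bend radius; twinax velocity factor 0.7.

RU_MM = 44.45                   # 1 rack unit = 1.75 in = 44.45 mm
SKUS_MM = (1500, 2000, 2500)    # DAC lengths used in the diagram
ROUTING_ALLOWANCE_MM = 1200.0   # hypothetical slack/routing budget
VELOCITY_FACTOR = 0.7           # hypothetical; check the cable datasheet
C_M_PER_S = 299_792_458.0       # speed of light in vacuum


def required_length_mm(leaf_ru: int, server_ru: int) -> float:
    """Vertical drop between the two RUs plus the routing allowance."""
    return abs(leaf_ru - server_ru) * RU_MM + ROUTING_ALLOWANCE_MM


def pick_sku_mm(leaf_ru: int, server_ru: int) -> int:
    """Return the shortest SKU that is at least the required length."""
    need = required_length_mm(leaf_ru, server_ru)
    for sku in sorted(SKUS_MM):
        if sku >= need:
            return sku
    raise ValueError(f"no SKU long enough for {need:.0f} mm")


def delay_ns(length_m: float) -> float:
    """One-way propagation delay (ns) for a copper run of the given length."""
    return length_m / (VELOCITY_FACTOR * C_M_PER_S) * 1e9


if __name__ == "__main__":
    leaf_ru = 42
    drops = {"Server-A": 38, "Server-B": 32, "Server-C": 26, "Server-D": 20}
    for name, ru in drops.items():
        sku = pick_sku_mm(leaf_ru, ru)
        print(f"{name} RU{ru}: {sku / 1000:.1f}m, ~{delay_ns(sku / 1000):.2f} ns")
```

With those assumed numbers the sketch lands on the same 1.5/2.0/2.0/2.5 m split as the diagram. Each 0.5 m step is worth roughly 2.4 ns of one-way propagation delay at the assumed velocity factor, which is why the latency gain from shorter runs is marginal next to the weight and tidiness benefits.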

Labeling scheme:

- Leaf ports: P01–P16 (logical)
- Designators: 4x 25G lanes per 100G QSFP28 port (physical)
- Servers: A, B, C, D with SFP28 1–4 (A-1, A-2, A-3, A-4)
- Cables: DAC-1..4 with length tag (e.g. DAC-3 2.0m)
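The labeling scheme can also be generated rather than hand-written. This hedged sketch assumes, hypothetically, that DAC-n plugs into leaf port P0n and that its four 25G breakout legs land on ports 1 through 4 of a single server, as the diagram suggests; the `P01/L1` lane notation and the overall label format are placeholders invented for illustration, not an established convention.

```python
# Sketch of the labeling scheme. ASSUMPTION (hypothetical): DAC-n sits on
# leaf port P0n and its four 25G breakout legs land on server ports X-1..X-4.
from dataclasses import dataclass


@dataclass(frozen=True)
class Leg:
    cable: str        # e.g. "DAC-1"
    length: str       # length tag, e.g. "1.5m"
    leaf_port: str    # logical leaf port, e.g. "P01"
    lane: int         # physical 25G lane within the 100G QSFP28 port, 1-4
    server_port: str  # e.g. "A-1"

    def label(self) -> str:
        # Hypothetical label format: "DAC-1 1.5m P01/L1 -> A-1"
        return (f"{self.cable} {self.length} "
                f"{self.leaf_port}/L{self.lane} -> {self.server_port}")


def breakout_legs(n: int, length: str, server: str) -> list[Leg]:
    """Expand one 100G-to-4x25G breakout cable into its four labeled legs."""
    if not 1 <= n <= 16:
        raise ValueError("leaf ports run P01-P16")
    return [
        Leg(f"DAC-{n}", length, f"P{n:02d}", lane, f"{server}-{lane}")
        for lane in range(1, 5)
    ]


if __name__ == "__main__":
    plan = [(1, "1.5m", "A"), (2, "2.0m", "B"), (3, "2.0m", "C"), (4, "2.5m", "D")]
    for n, length, server in plan:
        for leg in breakout_legs(n, length, server):
            print(leg.label())
```

Printing the plan yields sixteen labels, one per breakout leg, such as `DAC-3 2.0m P03/L1 -> C-1`.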

Where are these new DAC?

On their way... hopefully sooner than the stated arrival date.

[update] Guess What Showed Up?

One unit arrived with the correct SKU, firmware, and PCB revision (MBF2H332A-AECOT Rev-C1 2022-02-15), and three of the same SKU arrived without the correct firmware or PCB revision. So three of them will not work for this project; in an ideal world, the vendor will quickly reship the correct ones.